In the fast-evolving world of artificial intelligence, a new controversy has emerged, putting tech giants OpenAI and Google in the spotlight.

The heart of the issue? The use of YouTube content for training sophisticated AI models like OpenAI’s ChatGPT and Sora, sparking a debate over ethical AI development and copyright concerns.

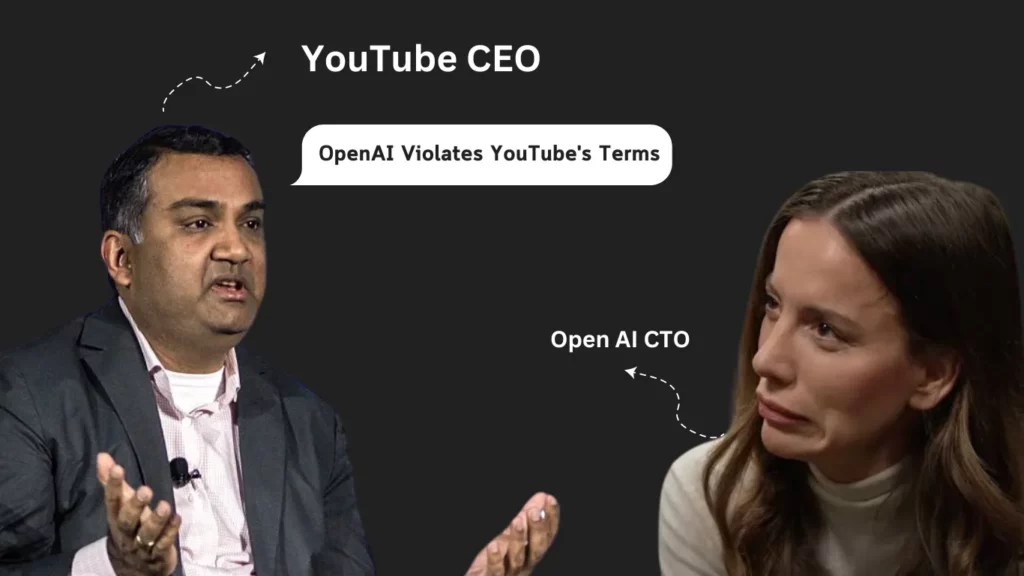

YouTube’s CEO, Neal Mohan, has warned OpenAI that using YouTube videos to train its models would violate the platform’s terms of service.

This move underscores a growing concern about the ethical boundaries of AI research and the responsibilities tech companies have in navigating these uncharted waters.

Scrutiny intensified when Mira Murati, CTO of OpenAI, admitted she was unsure where Sora’s training data came from, raising further questions about the practices behind these advanced technologies.

Reports have since emerged that OpenAI transcribed more than a million hours of YouTube videos to refine its models, a practice that sits in a legal gray area under copyright law. In response, Google has reiterated its stance against unauthorized data scraping, emphasizing the technical and legal measures in place to protect content creators’ rights.

As the AI community grapples with the challenge of sourcing high-quality training data, companies are exploring alternative strategies, including the generation of synthetic data and curriculum learning.

However, these solutions are still in their infancy, and the legal and ethical debates surrounding AI training practices are far from over.

The dispute highlights the tension between innovation and regulation, as tech giants strive to lead in AI development while complying with copyright law and ethical standards.

Angelou Berrisch