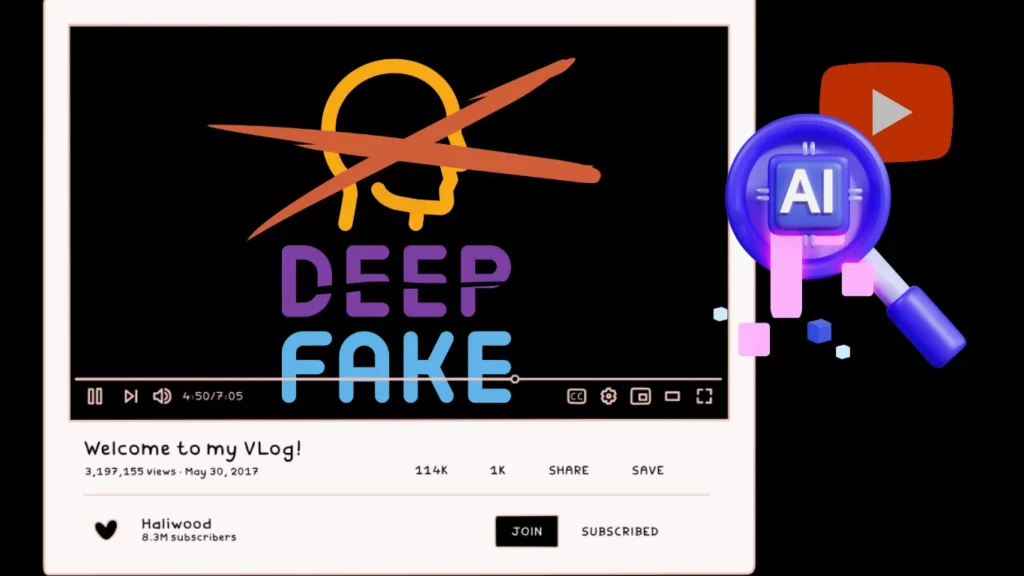

YouTube is stepping up its efforts to combat cyberbullying and harassment involving AI deepfake content by introducing stricter policies.

The platform will no longer tolerate content that “realistically simulates” minors or other victims describing violent incidents or their own deaths.

This move directly addresses a concerning trend in true crime circles where AI-powered depictions, particularly of children, describe gruesome violence using childlike voices.

Under the updated policy, channels that violate it will receive a strike: the offending content is removed and the channel's activity is temporarily restricted.

For instance, a first strike blocks video uploads for a week, and penalties escalate for repeated violations within 90 days, up to the potential removal of the entire channel.

YouTube’s commitment to tackling this issue aligns with recent efforts by various platforms to regulate AI-generated content.

TikTok, for instance, now requires creators to label AI-generated content.

YouTube itself has implemented strict rules for AI voice clones of musicians, demonstrating a proactive approach to maintaining a safer and more responsible content environment.

As users and content creators, it’s crucial to be aware of these changes and comply with the updated policies to ensure a positive and secure online space for everyone.